About the untested side effects we’re overlooking in the race to AI implementation.

What if you were offered a new medication that promised to transform your life: boosting your productivity, helping you make better decisions, and freeing up more time for what truly matters?

Now imagine discovering that this medicine had never undergone clinical trials, had no documented side effects, and was being administered simultaneously to billions of people worldwide without their explicit consent.

We would consider such a scenario deeply unethical in healthcare. Yet this is precisely what’s happening with artificial intelligence today.

The largest untested experiment in human history

The rapid deployment of AI across every sector of society represents the largest untested experiment in human history. Unlike medicine, where rigorous testing, ethical reviews, and careful implementation are fundamental requirements, AI systems are being integrated into our workplaces, decision-making processes, and social institutions with astonishing speed and minimal oversight.

This isn’t just another technological shift—it’s a fundamental reimagining of how humans work, create, decide, and relate to one another. The stakes couldn’t be higher, yet the caution couldn’t be lower.

Technology companies release increasingly powerful models with little understanding of their long-term impacts. Initiatives that began as non-profits with ethical guidelines transform overnight into profit-driven enterprises once the pressure to scale computing resources intensifies. Ethical concerns that once seemed paramount are quietly shelved in the race to market dominance.

Meanwhile, organisations feel the pressure to adopt these technologies or risk obsolescence.

Executive boardrooms resonate with anxiety about competitors gaining an AI advantage. Strategic questions about purpose and long-term impact give way to tactical concerns about implementation speed. What’s lost in this rush is thoughtful consideration of how these tools will transform organisational culture, redistribute power, and reshape human roles.

Consultancies have emerged as the supposed experts in this chaotic landscape. Many promise painless transformations and quick returns, selling solutions without fully understanding the systems they’re helping to implement. Few take the time to consider the ethical responsibilities inherent in advising on technologies with such profound implications.

And governments—the traditional backstop for protecting public interest—find themselves remarkably unprepared. Regulatory frameworks designed for previous technological eras struggle to address AI’s unique challenges. Politicians and policymakers attempt to understand and govern systems that even their creators cannot fully explain or predict.

The human impact beyond efficiency gains

The human impact of this experiment is already becoming apparent. Jobs disappear without adequate transition plans. The skills gap widens between those who can work with AI and those who cannot. Psychological stress grows as workers wonder whether their expertise will remain relevant. Critical decisions once made by experienced humans are increasingly delegated to systems optimised for efficiency rather than wisdom or compassion.

The consequences extend beyond the workplace. As AI adoption accelerates unevenly, inequality grows between the organisations, regions, and individuals with access to these tools and those without. The environmental costs of the massive computing infrastructure required to train and run advanced AI models mount quietly in the background, but this is only a technical problem.

Most concerning is the subtle cultural shift in how we value human contribution—what happens to our sense of purpose when what we once considered uniquely human capabilities are replicated by machines?

This isn’t an argument against AI advancement. Rather, it’s a call for implementation that matches the power of these tools with appropriate care and consideration.

What would it look like if we approached AI with the same caution we apply to medical interventions?

A human-first approach to navigating the threshold

We find ourselves in what anthropologists call a liminal space—a threshold between what was and what will be. This transitional period offers a rare opportunity to thoughtfully design how these powerful technologies will integrate into our human systems. But this requires resisting the pressure to rush ahead without reflection.

A human-first approach to AI implementation begins by inverting the typical questions. Rather than asking “How quickly can we deploy this technology?” we might ask “How might this technology best serve human flourishing?” Instead of “How many jobs can we automate?” perhaps “How can this technology enhance human capability and meaning?”

This approach isn’t merely idealistic; it’s practical. Organisations that implement AI without employee engagement often face resistance, underutilisation, and ultimately failed initiatives. Those that fail to establish ethical frameworks and governance structures find themselves navigating increasingly complex moral terrain without a compass. And businesses focused solely on short-term efficiency gains miss the deeper transformational opportunities these technologies offer.

The way forward requires balancing innovation with human considerations. It means creating space for reflection amidst pressure for rapid adoption. It demands that we expand our metrics beyond efficiency and growth to include human thriving, environmental sustainability, and societal benefit.

Most importantly, it requires acknowledging that we are all—technology companies, organisations, consultancies, governments, and individuals—participants in an unprecedented experiment. And with that acknowledgment comes responsibility: to proceed with appropriate caution, to monitor impacts carefully, and to be willing to adjust course when necessary.

The choice before us isn’t whether to embrace AI or reject it. The question is whether we’ll shape these technologies to enhance what makes us human or allow ourselves to be shaped by technologies we’ve deployed without sufficient reflection.

What if we treated AI implementation like medicine—with careful testing, ethical oversight, and a commitment to first do no harm? The future of work—indeed, the future of being human—might depend on our answer.

Before you go, here are two resources that build on the themes of AI implementation:

- The AI Workforce Transformation — Discover why aligning automation with human potential is the defining challenge for future-ready organisations.

- What-if: The Liminality Toolkit (AI Edition) — A provocative framework to help leaders think more creatively and ethically about AI integration.


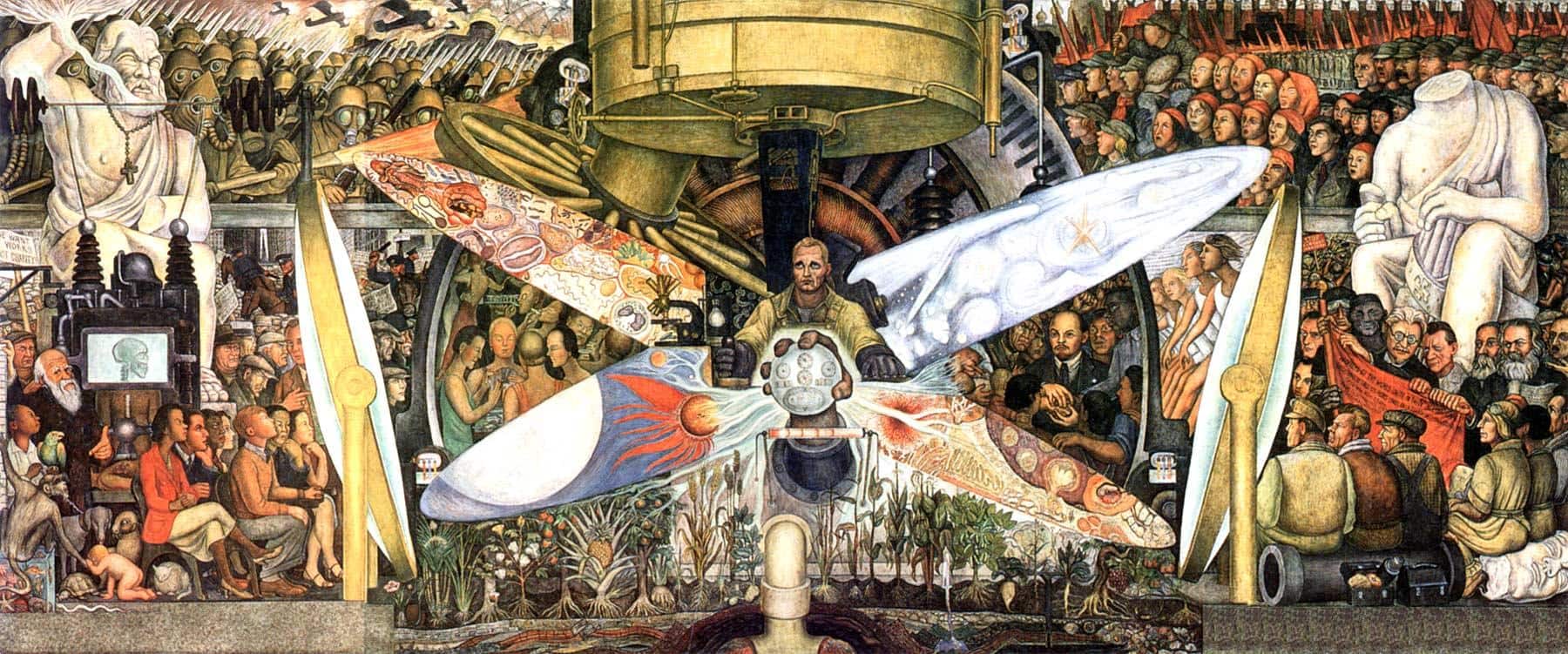

Artwork: Diego Rivera, mural “Man at the Crossroads” (1934)