Navigating the emerging split between those who direct AI and those directed by it.

Executive summary

As artificial intelligence transforms workplaces globally, a significant divide is emerging between two classes of workers: those who direct AI systems and those who are directed by them.

This “agency gap” raises profound questions about the future of work, human dignity, and societal structure. This article examines the implications of this divide and proposes frameworks for more equitable and human-centred AI integration.

The prompter-prompted pattern

Consider two professionals in the near future:

Emma, a digital strategist, spends her day crafting AI prompts to generate content variations, selecting and refining outputs, and designing automation workflows that eliminate tedious tasks. She exercises significant agency in directing intelligent systems to achieve business objectives.

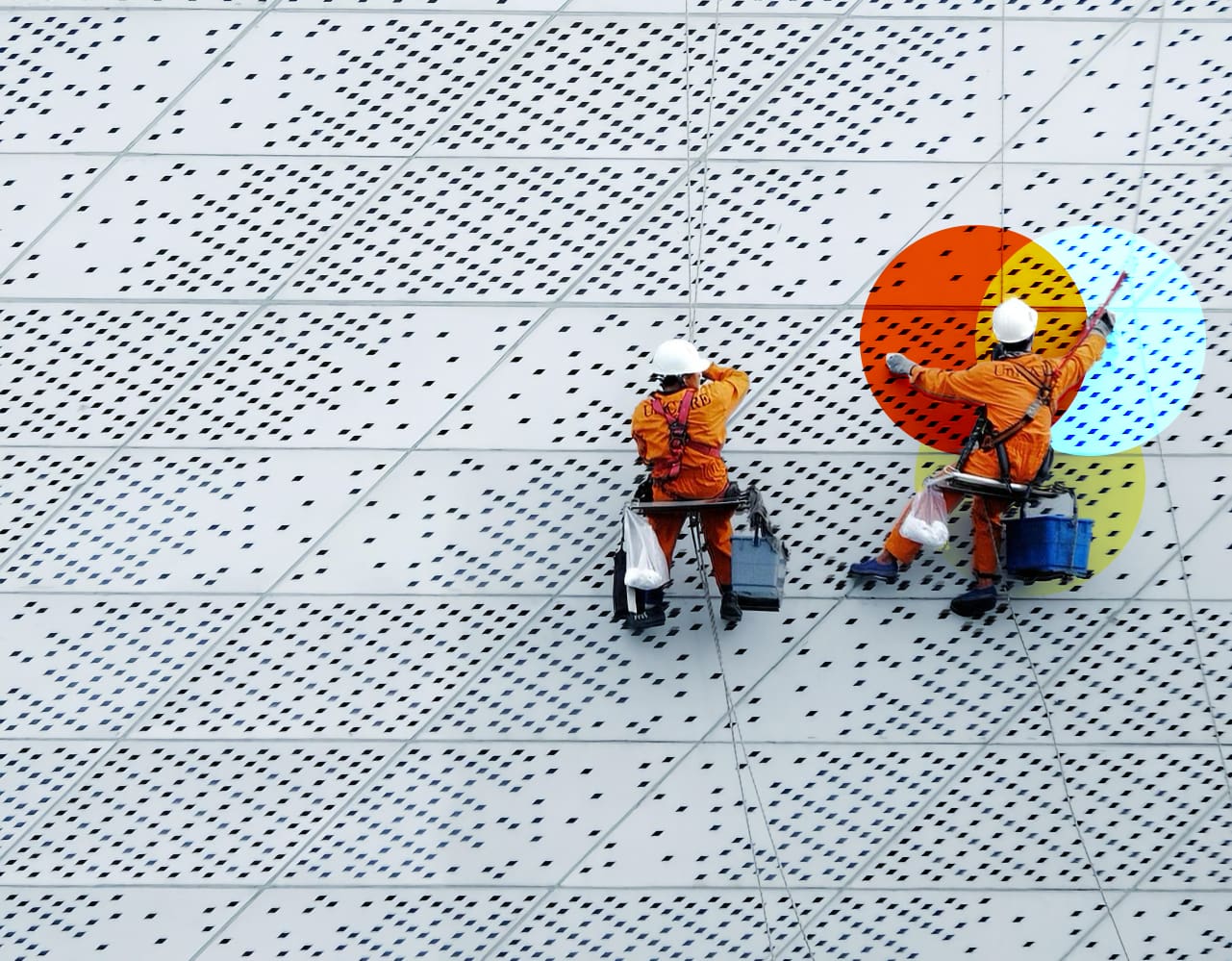

Jacob, a logistics worker, follows precise instructions delivered through an AI-powered headset that dictates his movements, monitors his efficiency, and reports deviations to management. Even during breaks, the system continues tracking and suggesting performance improvements.

Both work with AI, but with a fundamental difference: Emma directs AI; Jacob is directed by AI.

This divide is not speculative—it is already emerging across industries, recreating traditional power hierarchies in technological form.

Beyond professional environments

This agency division extends beyond the workplace. Consider how content discovery has transformed from active exploration (visiting cinemas or local video stores, engaging with knowledgeable staff) to algorithm-driven recommendations that narrow rather than expand our options.

A recent conversation with a colleague highlighted this tension. He envisioned AI systems creating personalised entertainment experiences that would eliminate the “inefficiency” of searching for content. Yet this convenience comes at a cost: the elimination of serendipitous discovery, diverse exposure, and the development of personal taste through active choice.

Both entertainment curation and workplace management reveal the same pattern: systems designed to optimise efficiency often quietly diminish human agency.

Quantifying the emerging hierarchy

The World Economic Forum’s Future of Jobs Report 2020 projected that a shift in the division of labour between humans and machines would displace 85 million jobs by 2025 while creating 97 million new roles.

However, these statistics obscure a crucial qualitative shift. The new roles will largely require specialised skills in designing, managing, and interpreting AI systems: the “prompter” class. Meanwhile, traditional middle-skill positions are increasingly being transformed into algorithmically managed roles with diminished autonomy: the “prompted” class.

This division creates a significant paradox. AI was intended to liberate human creativity from routine tasks, yet for many, it is implementing new forms of digital Taylorism. Factory workers once followed instructions from managers applying “scientific management”; now workers across sectors follow instructions from algorithms applying “machine learning optimisation.”

More concerning, this algorithmic management often represents a transitional phase before complete automation.

Once human work has been reduced to algorithm-directed tasks, organisations have effectively created a blueprint for eliminating those positions entirely.

The “prompted” class faces not only reduced autonomy in the present but potential elimination in the future.

Socioeconomic implications

The agency divide has profound implications beyond immediate workplace dynamics:

- Economic stratification: Primary economic benefits flow to technology owners and investors. Goldman Sachs estimates that generative AI could expose the equivalent of 300 million full-time jobs to automation globally, with most affected workers lacking the capital to participate in the new economy.

- Identity disruption: With employment central to modern identity, displacement creates existential challenges. Research indicates 47% of displaced workers report diminished self-worth.

- Health consequences: Job loss correlates with a 33% increased depression risk and 20% higher mortality rates. Even employed individuals experience heightened anxiety about technological obsolescence.

- Social reconfiguration: We observe the emergence of “techno-elite” versus “digitally excluded” classes, with significant implications for democratic participation and social cohesion.

The convenience paradox

This agency divide reflects a broader pattern in technological development that consistently prioritises efficiency over autonomy and meaning.

Each technological wave promises liberation from tedious tasks—and delivers on that promise.

However, we must question: liberation from what, and for what purpose? If AI eliminates the need to make choices about content consumption, learning pathways, or work processes, is that genuine freedom? Or does it represent a subtle infantilisation where autonomy is exchanged for convenience?

Contemporary technology increasingly reduces human experience to metrics and rewards engagement through gamification. These trade-offs have become so normalised that they often go unquestioned.

Particularly concerning is how readily organisations implement automation without considering its impact on human capability and dignity.

Human-to-human interactions are replaced with algorithmic management in pursuit of efficiency gains, without anyone examining whether efficiency should be the primary organisational value.

Towards a balanced integration model

The future is not predetermined. The agency divide reflects choices in how we design and deploy technology. Alternative approaches exist.

Progressive organisations are implementing more balanced models of AI integration:

- Worker-directed augmentation: Employees identify appropriate automation opportunities rather than having automation imposed from above

- Transparent algorithmic systems: AI systems explain their reasoning and allow human override when necessary

- Complementary teaming: AI manages routine aspects while humans maintain decision authority and creative direction

- Upward mobility pathways: Structured programmes that prepare workers to transition into the “prompter” class rather than remaining perpetually “prompted”

These approaches recognise that technology should enhance human potential rather than merely increase efficiency. They acknowledge that work provides more than economic value—it offers meaning, community, and identity.

Policy frameworks for equitable transition

While organisational initiatives are valuable, addressing the agency divide ultimately requires systemic intervention. Market forces alone cannot ensure equitable outcomes given the magnitude of this transformation.

A recent question from the HumanX AI Salon captures a key consideration: “Could AI help create more fulfilling jobs?”

This should be a mandatory consideration for any organisation implementing automation. The right to meaningful work and self-determination must be protected through appropriate governance frameworks.

New social contracts must:

- Establish rights to meaningful work and technological self-determination

- Create robust transition pathways for displaced workers

- Ensure equitable distribution of automation benefits

- Protect spaces for human autonomy and agency

- Prioritise human wellbeing over narrow efficiency metrics

This is not about impeding technological progress but directing it toward human-centred outcomes. The future cannot be prevented, but it can be shaped through collective agreement on prioritising humanity and sustainability.

Reframing the relationship

Returning to Emma and Jacob, a third path is possible. What if both could exercise meaningful agency in their relationship with AI? What if technology augmented human capability rather than replacing or directing it? What if systems preserved space for serendipity, exploration, and autonomous choice?

The agency divide transcends workplace dynamics—it shapes what kind of society we are building. Will a small class of “prompters” design systems that the majority merely follow? Or will technology expand everyone’s capacity for creativity, meaningful choice, and self-determination?

The solution lies not in rejecting technological advancement but in establishing appropriate governance to ensure it serves human needs.

This requires moving beyond simplistic techno-optimism or reflexive resistance toward a nuanced approach that aligns technological progress with human flourishing.

As we navigate this pivotal moment, our choices will determine whether AI enhances human dignity and expands prosperity or exacerbates inequality and diminishes agency. The technology itself is neutral—our values, policies, and design choices will determine which side of the agency divide most people experience.

The defining question is not what AI will do to us, but what we will do with AI. Will we use it to control or to empower? To narrow human possibility or to expand it? To concentrate agency or to distribute it?

The answer depends not on technological inevitability but on human choice—on our collective commitment to ensuring technology serves our highest aspirations rather than merely market efficiencies.

This article was originally published as part of Liminal Discovery’s ongoing exploration of emerging technological and social dynamics.

External resources:

- World Economic Forum: Future of Jobs Report 2025

- McKinsey Global Institute (2024): “The economic potential of generative AI: The next productivity frontier”

- MIT Sloan Management Review (2024): “Human-AI Collaboration: A Dynamic Relationship”